Hyper Historian's Data Exporter is a powerful, high-performance extension module for Hyper Historian that makes it easier to regularly export your logged data to third party storage components such as Microsoft SQL Server, Azure SQL, and Azure Data Lake.

Data Exporters work by scheduling tasks to sort logged data into a dataset and then transfer that dataset into a storage space, such as a data lake.

Note: Manual (on-demand) synchronization will be supported at a later date.

Users who previously used the Hyper Reader – a command-line tool to export data from Hyper Historian into a SQL Server database – should be able to use data exporters to accomplish the same tasks without having to use the command line. The Hyper Reader is still included for backwards compatibility, but we expect users will prefer to use the Data Exporter and would recommend it for new projects.

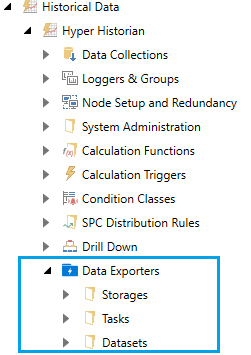

Data exporters are configured in Workbench under Historical Data > Hyper Historian > Data Exporters:

Location of Data Exporters

The Storage folder is where you configure the connections to data storage, such as SQL Server, Azure SQL, or Azure Data Lake.

The Datasets folder is where you configure the tags, filters, and the format (columns) of the data you want to export. The data can be metadata, raw, or aggregated values.

The Tasks folder is where you choose a storage and one or more datasets and configure how often you would like the data to be exported. Each time the task runs, it will export the data logged between this run and the last run.

Note: The first time a task runs it will not actually copy any data. It just marks the beginning of the range of data to copy. The Synchronization Offset configured on a task can be used to copy slightly further back than the last task run. The actual start time of the copy is the last run minus the synchronization offset, and the end time is the present.

The data explorer currently supports these storage types:

Microsoft Azure Data Lake

Microsoft Azure SQL

Microsoft SQL Server

We hope to add more storage types in the future, such as Hadoop Distributed File System (HDFS).

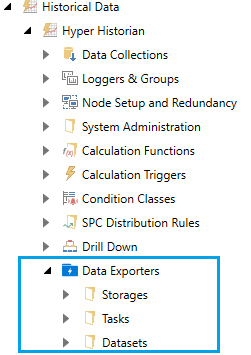

The Hyper Historian Data Exporter has its own set of configuration tables, which must be added to the same database as the Hyper Historian configuration.

To add the Data Exporter to an existing project, open Workbench, go to the Project tab, and select Configure Database. Choose your unified configuration or Hyper Historian database.

On the Install applications configuration in a database page, check to see if the Installed column is checked for the Hyper Historian Data Exporter. If it is already checked, you can cancel out of this dialog. If it is not checked, check the Install/Overwrite box for the Hyper Historian Data Exporter, then click Install.

Installing the Data Exporter Tables

Once the configuration has been installed, right-click on your project and select Configure Application(s) Settings. Make sure that the Hyper Historian Data Exporter is enabled and configured to use the same database as Hyper Historian.

Lastly, you will need to make sure the extension is enabled under Historical Data > Hyper Historian > System Administration > Installed Extensions. If you do not plan to use the Data Exporter, you can disable it on this page to save system resources.

Note: The Hyper Historian’s Installed Extensions page may be missing from Beta 1. It will be included by the final release. The extension should be enabled by default if the proper configuration database exists, even though this page cannot be checked. If you see the Data Exporter folder under Hyper Historian, it is enabled.

This example will walk you through configuring an export task to copy data into a SQL Server database.

In Workbench, expand Historical Data > Hyper Historian > Data Exporters.

Right-click on Storage create a new data storage.

Name this storage, Local SQL.

For Connection Type, select SQL / Azure SQL.

Select the Configure connection hyperlink under Connection String.

Follow the dialog to connect to your SQL Server database. Unless you have a specific database in mind, we recommend creating a new database by typing a name into the Database field. (The credentials you provide must have the proper permission to create a database.)

Note: If you installed SQL Express with your ICONICS installation, that instance will be named localhost\SQLEXPRESS2017.

You should now have a connection string in the Connection String field.

If desired, adjust the settings in the Advanced section.

Click Apply.

Right-click on Datasets and add a dataset definition.

Name this definition SimulatedData.

Go to the Columns tab.

If desired, add more columns or modify the existing columns.

Go to the Filters tab.

Click to add a new item in the Filters table.

On the new row, click in the Path Filter column and select the folder  button.

button.

Select the Various Signals folder. Select OK, then Apply. This will export data for all the tags in the Various Signals folder.

If desired you could further filter the tags in that folder by using the Point Name Filter column, or specific data types by using the Variable Type Filter.

The Variable Type Filter column accepts these values:

R (for raw data)

C (for calculated data)

A (for aggregated data)

Note: If you have specific tags you would like to log, you can use the Tags table instead of Filters. If both the Tags and Filters are enabled, tags that meet either condition will be included. (It is an “or” relationship.)

This example was for raw data. If you would like to export aggregated data, make sure to select Aggregated for Storage data on the Dataset definition tab, then configure the Aggregate Options. To choose the actual aggregate, on the Columns tab, add a column with a Column Value Type of Value and a Resampled Value Type of your desired aggregate.

Right-click on Tasks and add a synchronization task.

Name this task SignalsTask.

Under Storage, select the Local SQL connection, which we created earlier.

For Trigger, click on the trigger icon ![]() and browse for Date/Time Triggers > Every Minute.

and browse for Date/Time Triggers > Every Minute.

In the Data Storage Schedule section, set Recur every to one hour.

Set the Starting at time to be on the hour (such as 1:00, 2:00, etc.), and make sure it is in the past. (The exact date does not matter.)

Note: The Data Storage Schedule determines when a new table will be created in the data storage. With these settings, we will get a new table created once an hour, on the hour.

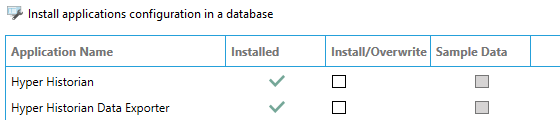

Example Table Format

Go to the Datasets tab.

Select Add and browse for the SimulatedData dataset we created earlier.

Hit Apply.

Start the Hyper Historian Logger, if it was not already running.

Note: The data exporter supports online changes. A restart is not needed if data exporter changes are made when the logger was already running.

Once logging is started, within one minute you should be able to see new tables in your storage database.

Datasets and storage connection strings can be aliased (or parameterized), allowing you to create one dataset or one storage and reuse them in multiple tasks to do slightly different things.

The following example will walk you through aliasing the folder of tags to export from the previous example.

Edit your SimulatedData dataset.

Go to the Filters tab.

Replace Signals* with <<Path>> (including the greater than and less than signs).

Apply your changes.

Edit your SignalsTask task.

On the Datasets tab you will see a specific field for Aliases. In that field, enter Path="Signals*";

Apply the changes.

Add a second task to the Tasks folder.

Give this task a unique name and configure it the same as SignalsTask, except in Aliases, enter Path="ScanRates*";

Apply the changes. Observe that you now have two tasks creating two sets of tables for two different folders of tags.

If you have multiple aliases in your dataset or storage you can specify them all in the task by separating them with semicolons, such as:

Alias1=Value1;Alias2=Value2

Note: We plan to implement an editor for the Alias field.

Note: Quotes around the alias value are optional. You may wish to use quotes if your value includes special characters, such as equal signs and semicolons.

The following fields can accept aliases:

Dataset > Filters tab > Data Point Name

Dataset > Filters tab > Point Name Filter

Dataset > Filters tab > Path Filter

Storage > Connection String

For a Microsoft Azure database, go to your Azure and browse for SQL database. Under Settings, you should see a Connection string.

Copy your Connection String and fill in the login credentials.

Crete a new storage in Workbench, set the Connection Type to SQL / Azure SQL, then paste your connection sting into the Connection String field. The final connection string should look something like this:

Server=tcp:YourAzureDatabaseName.database.windows.net,1433;Initial Catalog=YourCatalogName;Persist Security Info=False;User ID={your_username};Password={your_password};MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;

To export to an Azure Data Lake, create a new application registration (legacy) under your Azure Active Directory. Go to your Data Lake and Add Access of this Application Registration to Data Lake. Full permissions are required.

Note: See Microsoft Azure documentation on how to create a data lake. The exact steps on configuring a data lake may change.

Add Access Control for Application to your subscription. The Reader role should be enough.

In Workbench, create a new storage and set its Connection Type to Data Lake. Configure the Storage Connection section like so:

|

Workbench Field |

Where to Find the Value in Microsoft Azure |

|

Storage Name |

Data Lake Name under Data Explorer |

|

Root Folder |

Data Explorer (under Data Lake Name) |

|

Domain Name |

Azure Active Directory > Domain Names |

|

Application ID |

Azure Active Directory > App registrations |

|

Authentication |

Registered App > API Access > Keys |

Note: These locations depend on Microsoft’s organization of Azure and may change over time. Please consult Microsoft’s documentation if you are having trouble finding these values.

See Also: